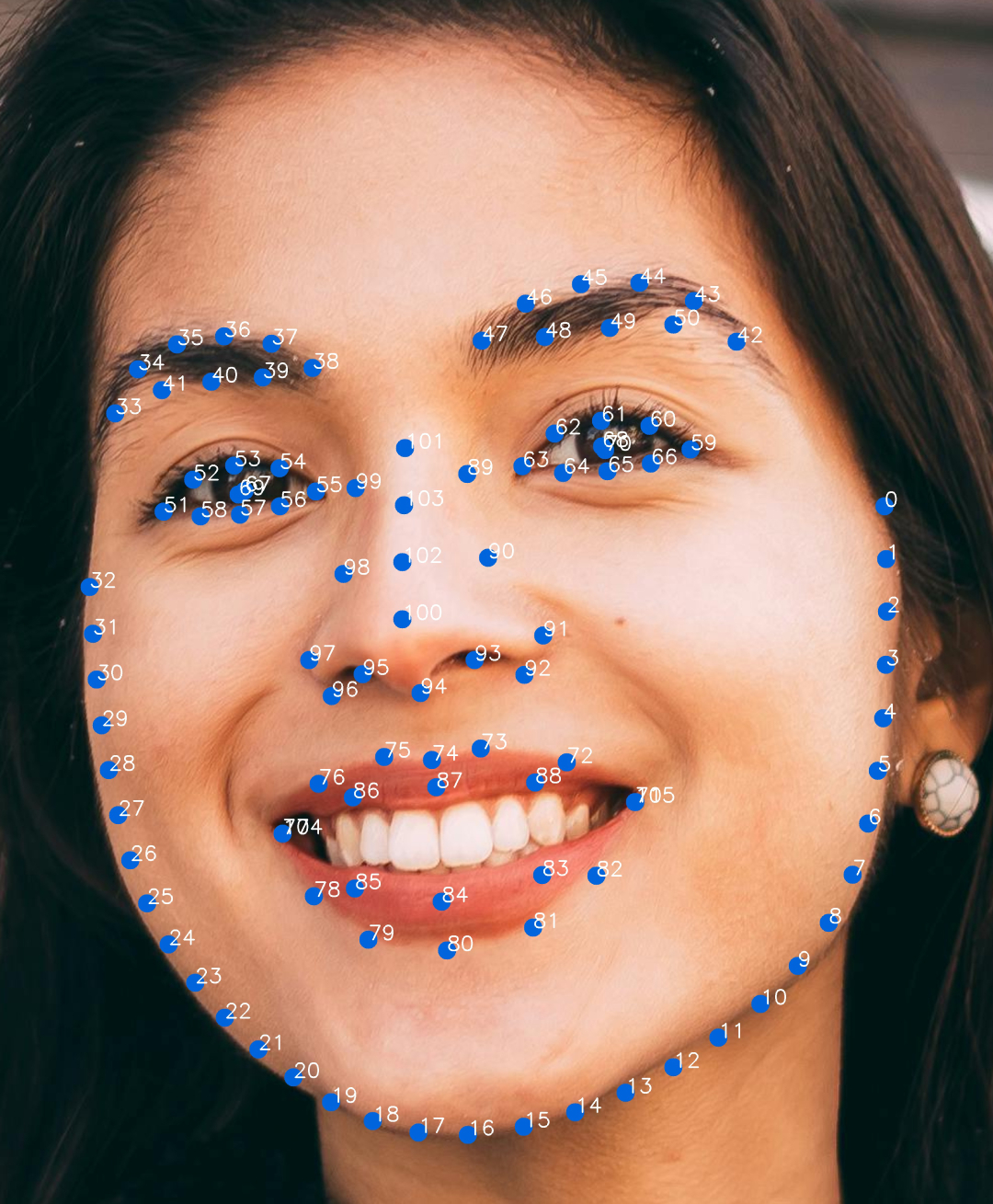

Dense Facial Landmark Prediction

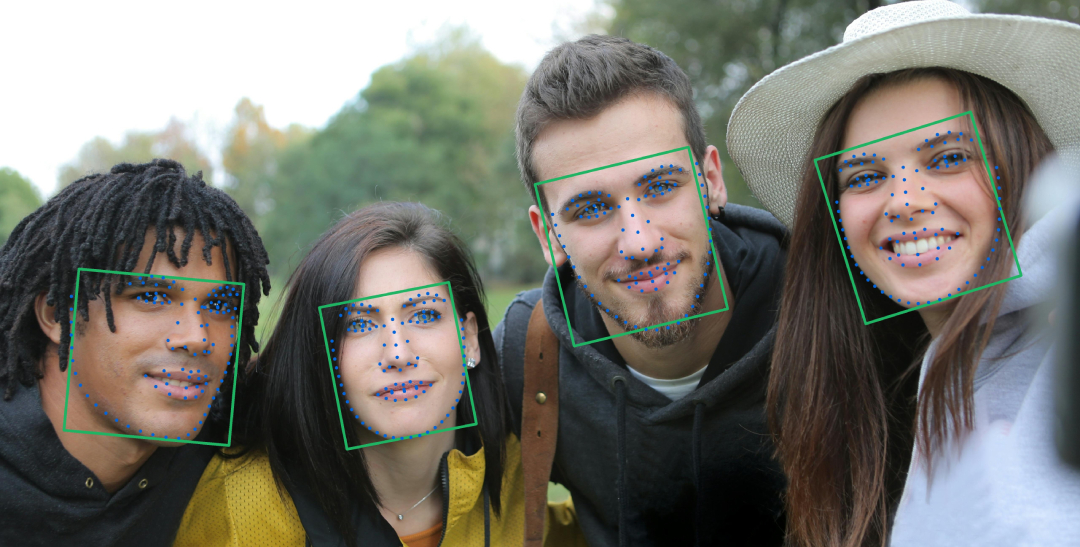

HyperLandmarkV2 is our latest high-precision facial landmark detection model, optimized for mobile devices. It is designed to integrate with AR cameras, beauty filters, and skin analysis applications. On mid-range iOS and Android devices, it averages 1 ms of inference time per frame, delivering real-time performance without compromising accuracy.

Usage

Python

# Detect faces first, then query the dense landmarks of each detected face
faces = session.face_detection(image)
for face in faces:
    landmarks = session.get_face_dense_landmark(face)
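
Once the landmarks for a face are available, they usually need light post-processing before drawing or cropping. The following is a minimal sketch, assuming the landmarks arrive as a sequence of `(x, y)` pairs in image pixel coordinates. The helper names `to_pixel_coords` and `bounding_box` are hypothetical and not part of the SDK.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float]


def to_pixel_coords(landmarks: Iterable[Point], width: int, height: int) -> List[Tuple[int, int]]:
    """Round (x, y) landmarks to integer pixels and clamp them inside the image.

    Assumption: landmarks are given in pixel coordinates of a width x height image.
    """
    pixels = []
    for x, y in landmarks:
        px = min(max(int(round(x)), 0), width - 1)
        py = min(max(int(round(y)), 0), height - 1)
        pixels.append((px, py))
    return pixels


def bounding_box(landmarks: Iterable[Point]) -> Tuple[float, float, float, float]:
    """Return (x_min, y_min, x_max, y_max) enclosing all landmarks."""
    pts = list(landmarks)
    if not pts:
        raise ValueError("no landmarks given")
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    return min(xs), min(ys), max(xs), max(ys)
```

The clamped integer coordinates can be passed to any drawing routine, and the bounding box gives a tight crop region around the face.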

C

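HInt32 numOfLmk;
/* Query how many dense landmark points the model outputs */
HFGetNumOfFaceDenseLandmark(&numOfLmk);
HPoint2f* denseLandmarkPoints = (HPoint2f*)malloc(sizeof(HPoint2f) * numOfLmk);
/* Fill the buffer with the landmarks of the face at position `index` */
HFGetFaceDenseLandmarkFromFaceToken(multipleFaceData.tokens[index], denseLandmarkPoints, numOfLmk);
/* ... use denseLandmarkPoints ... */
free(denseLandmarkPoints); /* release the buffer when it is no longer needed */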

C++

auto dense_landmark = session->GetFaceDenseLandmark(result);

Android

Point2f[] lmk = InspireFace.GetFaceDenseLandmarkFromFaceToken(multipleFaceData.tokens[0]);

Landmark Points Order

We provide a set of dense facial landmarks based on a 106-point standard. The following diagram shows the mapping between landmark indices and their corresponding facial regions.
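
To compare a detection result against the index mapping, it can help to attach each point's array position to its coordinates. This is a minimal sketch, assuming the landmarks are a sequence of `(x, y)` pairs and that the array position is zero-based; whether the diagram numbers points from 0 or 1 should be checked against it. The helper `index_landmarks` is hypothetical and not part of the SDK.

```python
from typing import List, Sequence, Tuple

# Point count of the 106-point standard described above
NUM_DENSE_LANDMARKS = 106


def index_landmarks(
    points: Sequence[Tuple[float, float]],
    expected: int = NUM_DENSE_LANDMARKS,
) -> List[Tuple[int, float, float]]:
    """Pair every landmark with its zero-based array position.

    Raises ValueError when the number of points differs from `expected`,
    which usually indicates a different landmark layout.
    """
    if len(points) != expected:
        raise ValueError(f"expected {expected} landmarks, got {len(points)}")
    return [(i, float(x), float(y)) for i, (x, y) in enumerate(points)]
```

Each returned tuple `(index, x, y)` can be drawn as a text label next to its point, which makes it straightforward to read off the facial region that an index belongs to.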